Our goal for this testing was to get a relatively wide sweep of CPUs tested so that we could find any potential shortcomings of the testing approach. This test suite has gone through a few months of validation, so it’s time to try it out in the real world. It takes us about 6 months to a year to change our testing methodology, as we try to stick with a very trustworthy set of tests before introducing potential new variables. We understand that many of you have other requests still awaiting fulfillment, and want you to know that, as long as you tweet them at us or post them on YouTube, there is a good chance we see them. We’ve had a lot of requests to add some specific testing to our CPU suite, like program compile testing, and today marks our delivery of those requests. As new CPUs launch, we’ll continue adding their most immediate competitors (and the new CPUs themselves) to our list of tested devices. We don’t yet have a “full” list of CPUs, naturally, as this is a pilot of our new testing procedures for workstation benchmarks. We’re starting with a small list of popular CPUs and will add as we go. Today is the unveiling of half of our new testing methodology, with the games getting unveiled separately.

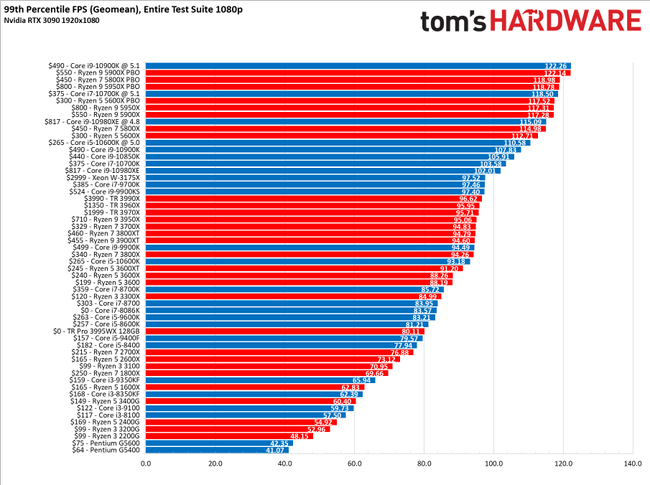

These are new for us, but we’ve added program compile workloads, Adobe Premiere, Photoshop, compression and decompression, V-Ray, and more. New testing includes more games than before, tested at two resolutions, alongside workstation benchmarks. This is an exciting milestone for us: We’ve completely overhauled our CPU testing methodology for 2019, something we first detailed in our GamersNexus 2019 Roadmap video.
